Written by Nikola Ljubešić and Tomaž Erjavec, edited by Darja Fišer

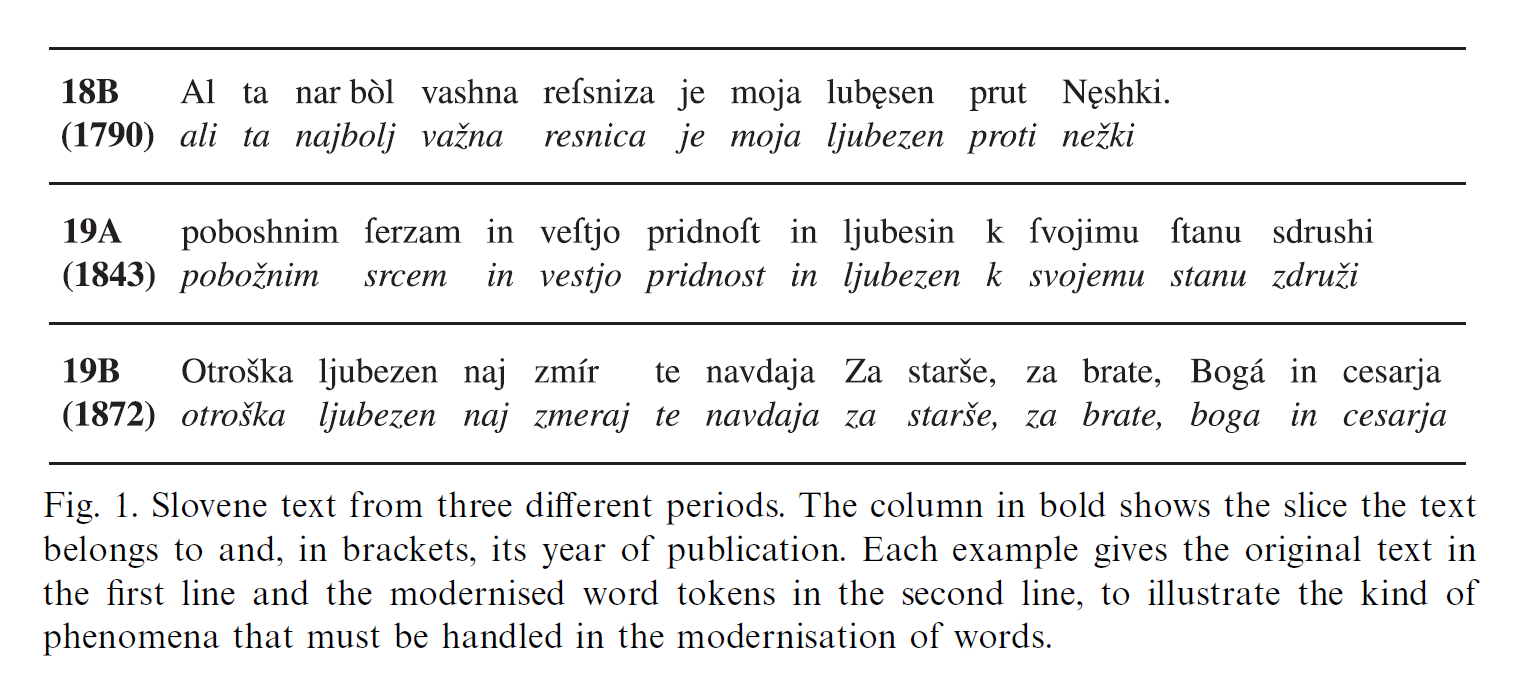

A well known problem with using text annotation tools that have been trained on datasets of standard language for texts written in non-standard language, such as dialects, historical varieties, or user-generated content, is that the results are drastically decreased. A common approach to overcome this problem is to first normalize (i.e., modernize or standardize) the non-standard text and only then proceed with further processing. As an additional benefit, normalization of non-standard texts also simplifies searching in such text collections.

CSMTiser, available on the CLARIN.SI GitHub site, is a supervised machine learning tool that performs word normalization by using Character-level Statistical Machine Translation. The tool is a wrapper around the well known Moses SMT package, which enables non-computer-scientists to train and run a text normalizer by editing the configuration file, running a script for training the normalizer, and then another one for applying it.

The tool has been very efficient in modernizing historical Slovene (Scherrer and Erjavec, 2016) and Slovene user-generated content (Ljubešić et al. 2016). It has also been successfully applied to normalize Swiss dialects to a common denominator (Scherrer and Ljubešić, 2016) and to modernise historical Dutch for the purposes of further processing (Tjong Kim Sang, 2017) within a shared task in which the CSMTiser ranked first among eight teams, many of which applied neural approaches. The success of the CSMTiser shows the strength of a simple, yet powerful approach to text normalization. Even today, after significant improvements in the area due to deep learning, the neural approaches outperform the CSMTiser only by 1 to 2 accuracy points, which is low given a large increase in the complexity of processing (Lusetti et al. 2018, Ruzsics and Samardžić, 2019).

The importance of text normalization can clearly be seen through the improvements in downstream text processing on the basic task of part-of-speech tagging: while 18th century Slovene processed without normalization gives a PoS tagging accuracy of 58%, 93% is achieved on the normalized text. Less drastically but still very noticeably, PoS tagging user-generated content without prior normalization achieves an accuracy of 83%, while normalizing the text prior to tagging produces an accuracy of 89% (Zupan et al., in press).

We expect that new tools and approaches will emerge that will outperform the CSMTiser both in higher accuracy and lower complexity, which is why CLARIN.SI focuses on providing publicly available training datasets. For text normalization, the repository offers datasets for learning normalization of Slovene, Croatian and Serbian user-generated content, as well as datasets for normalizing historical Slovene in two distinct historical periods.

References

- Scherrer, Y., & Erjavec, T. (2016). Modernising historical Slovene words. Natural Language Engineering, 22(6), pp. 881-905.

- Ljubešić, N., Zupan, K., Fišer, D., & Erjavec, T. (2016). Normalising Slovene data: historical texts vs. user-generated content. In Proceedings of the 13th Conference on Natural Language Processing (KONVENS), pp. 146-155, September 19–21, 2016, Bochum, Germany.

- Scherrer, Y., & Ljubešić, N. (2016). Automatic normalisation of the Swiss German ArchiMob corpus using character-level machine translation. In Proceedings of the 13th Conference on Natural Language Processing (KONVENS), pp. 248-255, September 19–21, 2016, Bochum, Germany.

- Tjong Kim Sang et al. (2017). The CLIN27 shared task: Translating historical text to contemporary language for improving automatic linguistic annotation. Computational Linguistics in the Netherlands Journal, 7, 53-64.

- Lusetti, M., Ruzsics, T., Göhring, A., Samardžić, T., & Stark, E. (2018). Encoder-Decoder Methods for Text Normalization. In Proceedings of the Fifth Workshop on NLP for Similar Languages, Varieties and Dialects (VarDial 2018), pp. 18-28.

- Ruzsics, T., & Samardžić, T. (2019). Multilevel Text Normalization with Sequence-to-Sequence Networks and Multisource Learning. arXiv preprint arXiv:1903.11340.

- Zupan, K., Ljubešić, N., & Erjavec, T. (in press). How to Tag Non-standard Language: Normalization vs. Domain Adaptation. Natural Language Engineering.

Click here to read more about Tour de CLARIN