In May 2022, the first CLARIN and Libraries took place in the Hague, Netherlands. The aim was to bring together the CLARIN community and national and academic libraries to present and discuss content delivery systems for researchers. The motivation for the workshop was the sense that various, mostly national, projects were disconnected from each other and also disconnected from research infrastructures such as CLARIN. The workshop resulted in a mailing list, blog post, keynote and a panel discussion at the 2022 CLARIN Annual Conference, two poster presentations, the CENL Dialogue Forum ‘National libraries as data’ and, finally, the plan to organise a second iteration of CLARIN and Libraries, to be hosted in Norway.

CLARIN and Libraries 2023 took place at the National Library of Norway in Oslo, on 5 and 6 December 2023. The main theme of the workshop was ‘large language models and libraries’. The topic was inspired by the advent of generative AI, but with the recognition that libraries have been working on large language models (LLMs) since long before ChatGPT. The idea was to bring together the CLARIN community and national and academic libraries, which provide the fuel to such models, in order to discuss technical and legal aspects of building and using large language models.The workshop brought together people associated with both CLARIN and libraries. There were 27 participants from 15 European countries, a similar amount to the first CLARIN and Libraries workshop. Many of the participants held (often leading) library-based roles.

Library Collections as Data

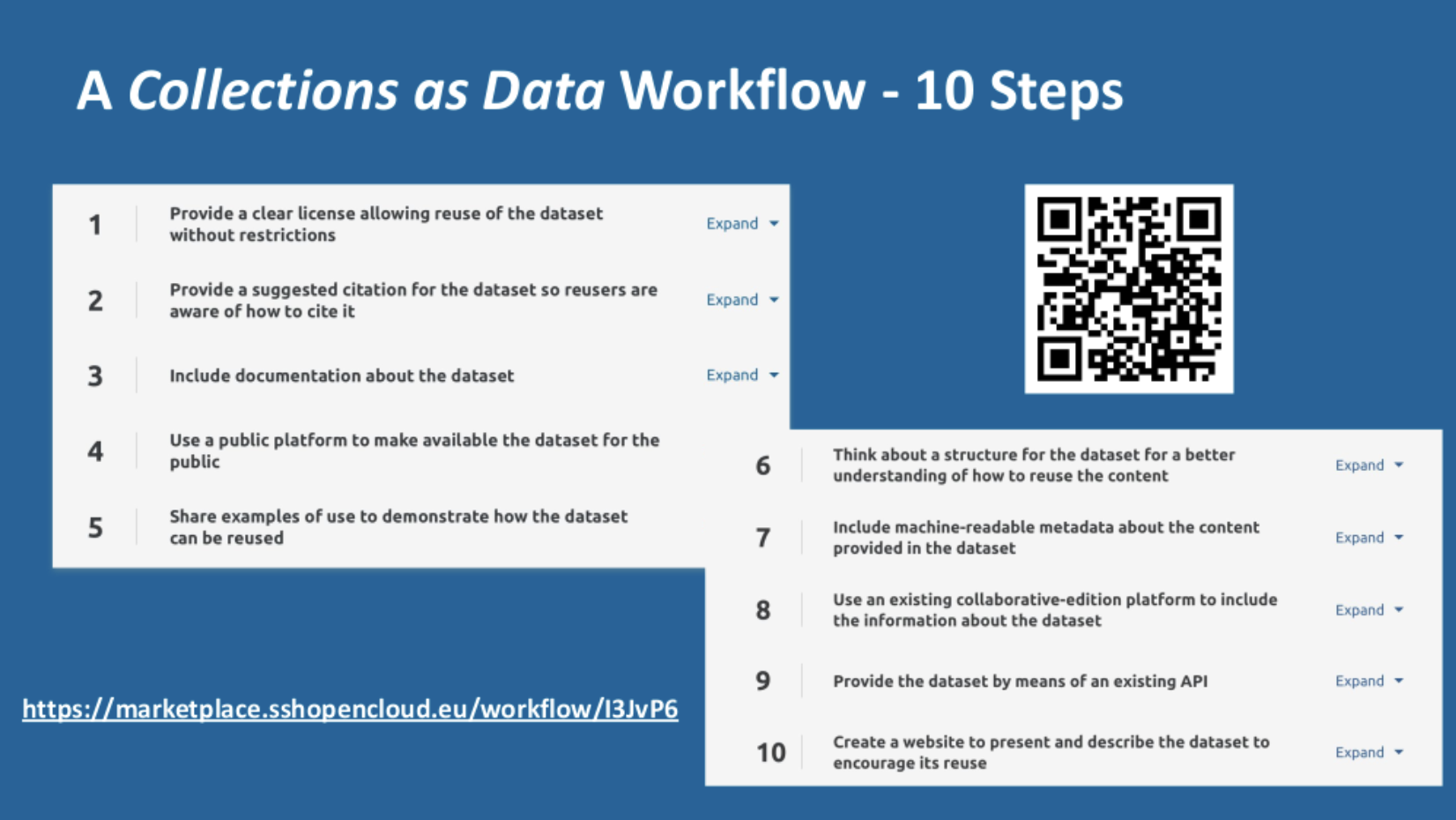

Sally Chambers of KBR, Royal Library of Belgium, and Ghent Centre for Digital Humanities, who is also a Director of DARIAH-EU, opened proceedings with a keynote presentation about the various activities around the Collections as Data project and associated activity, including its implementation at KBR in the DATA-KBR-BE project. This project aims to provide data-level access to the underlying files of digitised and born-digital cultural heritage resources to facilitate data analysis by means of tools and methods developed in the digital humanities. Sally's talk situated LLMs as part of a wider agenda for using library collections as research data. Collections as data checklist and workflow for their publication on the SSH Open Marketplace can be seen as a step towards creating and providing datasets for machine learning.

Building Large Language Models in a Library Setting

National Library of Norway

Javier de la Rosa from the AI-lab at the National Library of Norway (NLN) presented their work on building large language models on library collections. Since 2005, the NLN has digitised almost its entire text collections, amounting at present to a large corpus of 160 billion words for Norwegian, and has built large language models for text and speech on these collections. Javier first gave a general introduction to the foundations of statistical natural language processing, from bag-of-word models to modern transformer models and then introduced NB-BERT, a powerful Norwegian transformer model that can be fine-tuned for different purposes. He also introduced the Norwegian Colossal Corpus (NCC), available on HuggingFace and VLO, upon which NB-BERT was trained. While size matters, quality matters more when training a large language model: the NLN has a huge collection of data, but not all of the objects were usable due to e.g. OCR errors, and had to be re-OCRed. Javier also introduced the NLN's work on automatic speech recognition, using wav2vec2 and Whisper models for Norwegian and Sámi. A large share of the training data came from the Norwegian Language Bank, a CLARIN C-Centre, situated at the NLN. He finally discussed the benefits of the work of the AI-lab: Not only better access to content for users (the main audience of the National Library is the general public), but also the possibility to look at the collections in new ways, and greater focus on the content itself rather than its carrier.

German National Library

Peter Leinen gave a status update on the work on (large) language models at the German National Library. He first introduced the library holdings, currently consisting of 47 million works, of which 12 million are in digital form. Most objects are born-digital – there is almost no digitisation involved. The DSM directive from 2019 and the German copyright law from 2021 permitted the making of copies for textual data mining in a research setting, which was previously not possible. This led to an expansion of indexing activities, the start of DH projects, and the beginning of Text+, a National Research Data Infrastructure in Germany. Today, machine learning is used for subject cataloguing, but it remains a challenge due to the heterogeneous target vocabulary. LLMs are not being built so far, but they are a strategic priority and there are ongoing activities in the form of preparing a first large language model based on the newspaper collection in cooperation with the University of Leipzig, the Academy of Science in Leipzig and the ScaDS.AI (Leipzig/Dresden). Further, there are relevant activities in the form of work on the legal aspects of using LLMs with library data, work on derived text formats and workshops (e.g. the CENL Network Group ‘AI in Libraries’).

Sensitive Data in HPC – How Secure Can It Be?

Martin Mathiesen from CSC IT Center for Science in Helsinki talked about secure data processing in HPC clusters, addressing some misconceptions about what ‘secure processing’ is, using different traffic regulation policies in Finland and Germany as an analogy: there is stricter regulation in Finland than in Germany, but still fewer deaths per inhabitants and vehicle kilometres in Germany. CSC does not allow for the processing of personal data in their clusters and users are forbidden to upload sensitive data. But it is not possible to enforce this: as long as no accidents happen, everything is fine. Instead, certain precautions should be taken: data should be kept only for a short amount of time in order to prevent leaks, and encryption with proper handling of the keys should be used. Further, the systems should be actively hardened and monitored.

Lightning Talks

In a separate call, we invited speakers to give a five-minute lightning talk relevant to LLMs or another aspect of collaboration between libraries and research infrastructures. In total, there were six lightning talks: Juliane Tiemann on the the CLARINO Bergen repository and services, Philipp Conzett on AI integration in the Tromsø Repository of Language and Linguistics (TROLLing), Martin Mathiesen and Johanna Lilja on LLM projects at the National Library of Finland, Neil Fitzgerald on the AI4LAM initiative, Neil Jeffries on the progress of IIIF for text, and Maciej Piasecki on services and LLaM plans from CLARIN-PL.

Legal Aspects of LLMs in Libraries

Jerker Rydén, senior legal advisor from the Swedish Royal Library, was invited to give a keynote on the legal aspects of building and using large language models in an academic library setting, with a special emphasis on copyright and data protection. He gave a brief introduction to relevant EU law, e.g. the DSM directive (especially its TDM exception), the InfoSoc directive, the GDPR, and finally the EU Treaty Article National Security, and argued that control over language models can be in the interest of national security. He also discussed the case of cross-border activities and the question whether exceptions and limitations (E&L) or extended collective licensing (ECL) apply in such cases.

Discussion

Several questions and points concerning the training and use of large language models in libraries were discussed:

- Quality: What do we mean by high quality, e.g. measures to benchmark OCR? When to throw away data?

- Quantity: There is a balance between high-quality data and lots of data, because you will easily run out of data when training large language models.

- Transparency: It is important to know the sources used in the training of a model. Here, libraries play a very important role.

- Application: Why do we need LLMs? In some settings, traditional models fare better. On the other hand, LLMs can replace traditional full-text search with a question prompt to a language model.

- Original texts vs. the model: What is the rights situation concerning the publication of a model trained on copyright protected works?

The workshop was successful in bringing together relevant people working on large language models in libraries and the CLARIN community and discussions are likely to continue in forums such as the Conference of European National Librarians, AI4LAM and at the CLARIN Annual Conference.

More details of the event are available on the event page.